AI image-generating hobbyists are sharing and downloading a tool that solves what some of them consider to be a problem with many of the AI-generated images you see online: too many of the people in those images look Asian.

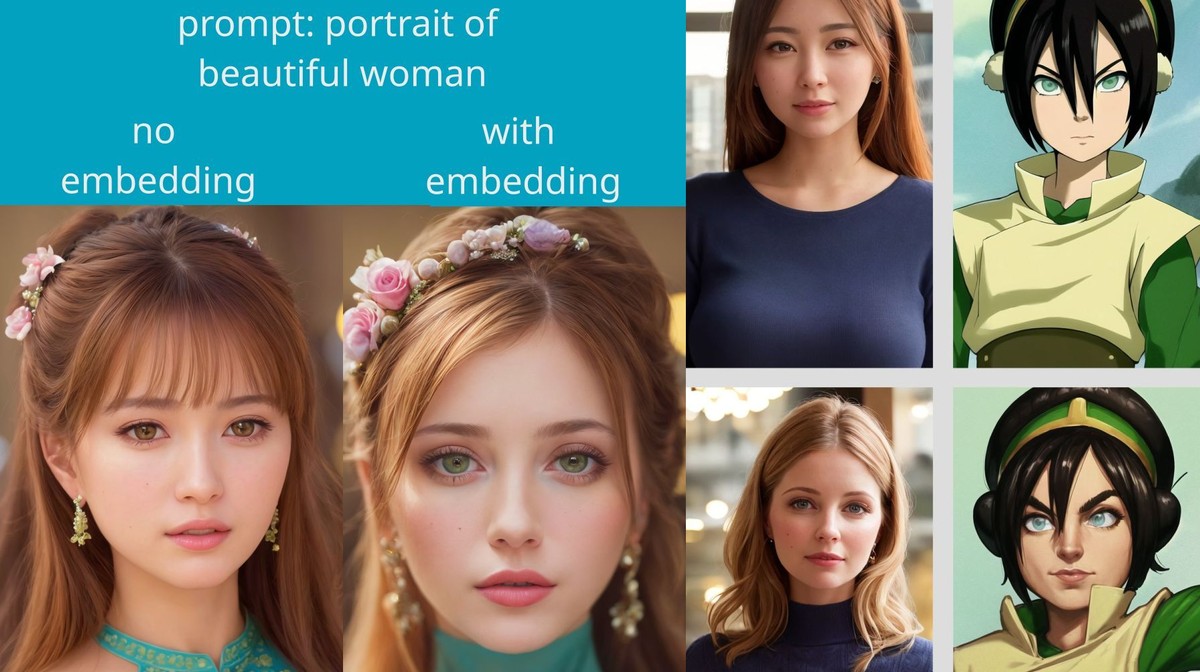

The “Style Asian Less” model, which has been downloaded more than 2,000 times on the AI art community site Civitai, can be applied to the open-source AI image-generating tool Stable Diffusion and to other Stable Diffusion models to make the resulting images “less” Asian.

Unlike OpenAI’s DALL-E, Stable Diffusion is free for users to tinker with and modify, which is why it’s a popular tool for generating pornographic images. Users can also train new Stable Diffusion models to achieve certain aesthetics, poses, or likenesses of specific characters, and then share those models with other users, who can mix and match them with other models. Many of the more popular models on Civitai, which can achieve a photorealistic look or produce images that look like Japanese animation, are trained on images of Asian people and manga. Because these models are popular, and because they are then used to build new models and images, many of the resulting images depict Asian people or have an Asian style.

“Most of the recent, good, training has been using anime and models trained on asian people and its culture,” Zovya, the Civitai user who created “Style Asian Less,” wrote in their description of the model. “Nothing wrong with that, it's great that the community and fine-tunning [sic] continues to grow. But those models are mixed in with almost everything now, sometimes it might be difficult to get results that don't have asian or anime influence. This embedding aims to assist with that.”

“Style Asian Less” is a great reminder of how the AI tools that are gaining popularity today actually work. They don’t think or come up with their own ideas; they simply use predictive capabilities to rearrange the data they are trained on, which means that whatever they produce will reflect the same biases present in that data.

Researchers and regular users have found countless examples of racial and gender bias in AI tools. The initial release of DALL-E was more likely to produce an image of a white man when prompted with the word “CEO,” while asking it to produce an image of a nurse or personal assistant was more likely to result in an image of a woman of color (OpenAI later promised to re-weight its training datasets in an attempt to offset the model’s biases). When Lensa, an AI-selfie generator app that runs using Stable Diffusion, launched in 2022, Asian women found that the app was more likely to sexualize them in the results.

It’s not surprising, then, that in a community of AI image-generating hobbyists where there’s a heavy focus on sexualized images of women, and on hentai specifically, an Asian aesthetic would start to “leak” into many images.

Zovya explains that they created “Style Asian Less” while trying to create a different AI model called “South of the Border.”

“I was trying to get south american people and culture, but there was a lot of asian culture leaking into the generated images. This embedding fixed that,” they wrote. “So it doesn't specifically race-swap asian to white-caucasian, it just removes asian so your prompts can be more effective with whatever other race or culture you're trying to portray…Again, this isn't meant to race-swap, but to help get other race and/or cultures in your image generation without the influence of the asian image training.”

Zovya told Motherboard in an email that the point of “Style Asian Less” was to address a bias that has emerged in the Stable Diffusion hobbyist community.

“Recently the largest demographic of people doing this training has shifted to the east as it has become quite popular in that region,” Zovya said. “As I mentioned in the description of my Style Asian Less model, that's a good thing. Further training is making the cumulative power and flexibility of the Stable Diffusion platform even stronger. But of course there is a cultural bias to the training based on their needs and tastes. We don't need to throw out all that training just because of the asian flavor. The tool I created removes the asian flavor but still keeps the fidelity of the training so that it can be used when generating images of other specific cultures as needed.”

Other Civitai users commenting on “Style Asian Less” said they had the same issue it was trying to solve.

“About 80% of my negative prompts [instructions a user can give the image-generating AI about what not to include] begin with ‘asian’ as the first term just because of the heavy bias and it works very effectively,” one user said.

“Incredible as always! you make the best stuff, I have noticed that Most models [are] very Asian leaning. Going to test it right away,” another user said.

“This is pretty awesome conceptually. I can see how some people could take its existence the wrong way, but I can hope they simply read the words you wrote and understand the intentions are only positive,” another user said.

The creator of “Style Asian Less” and some commenters seem to be aware that attempting to correct a bias, and treating entire cultures as simple knobs on the AI image-generating machine, can also be troubling regardless of their intentions.

"asian" - Google News

May 03, 2023 at 12:44AM

https://ift.tt/tFBgm9M

'Asian Less' AI Model Turns Asian People White - VICE
